Barry McGee

on 2 February 2016

Trimming the fat from the Ubuntu online tour

Maybe, like me, you seen more of the inside of your gym in January than you had for the six months previous. New year, new diet, new me.. or something like that.

A big creeping problem in recent years is that websites have been on an all out binge, and not just over the winter holidays — big videos, big images, fancy fonts, third-party libraries — they just can’t get enough of ’em.

Average page weights increased by 15% in 2014 and although I haven’t yet seen any similar research done for 2015 yet, I’m willing to bet that trend did not reverse.

Last week I was tasked with making some performance optimisations to the Ubuntu online tour.

This legacy codebase stretches all the way back to 2012, and as such was not benefitting from some of the modern tools we now have at our disposal as web developers.

We have been maintaining our largest codebases such as ubuntu.com and canonical.com to ensure they are as performant as they can be but this Ubuntu tour repository slipped through the cracks somewhat.

We have users all over the world and many of them don’t enjoy the luxury of fat internet pipes that we enjoy in our London office. Time to trim the fat…

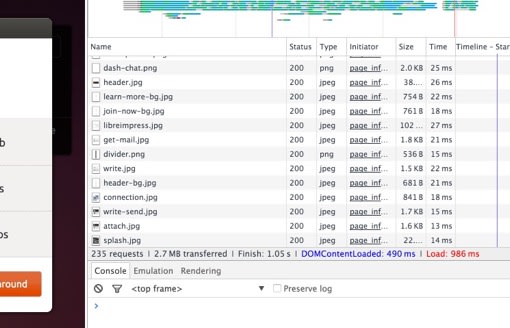

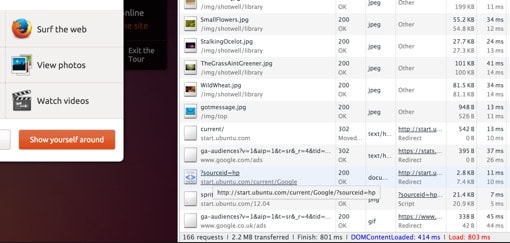

At first look, I noted on load of the site it required 235 HTTP requests to download 2.7MB of data. Chunky Charlie!

Delving into the codebase, I immediately spotted some big areas ripe for improvement:

- The CSS files were not being concatenated nor were they minified.

- The Javascript was also being loaded in separate files, also un-minified.

- The image assets were uncompressed.

- The HTML was un-minified.

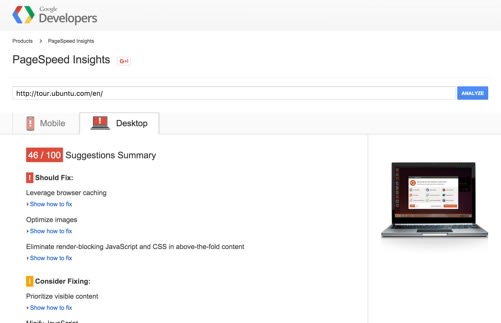

Beyond that – I ran the site URL through Google’s PageSpeed Insights and also discovered;

- Browser cacheing was not being being leveraged as static assets did not have any Expires headers specified

- There were quite a few CSS and javascript dependancies blocking rendering of the page.

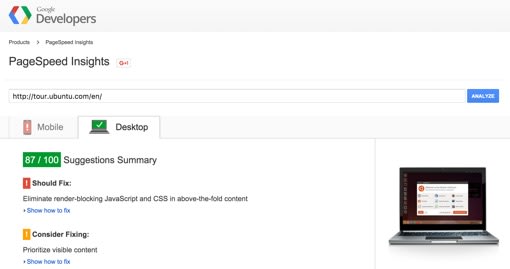

As you see, the site was only scoring a lowly 46/100, not great.

For jobs such as this, my first weapon of choice is the task runner, Gulp. It’s quick and easy to drop Gulp on top of any existing site and use some of it’s wide array of plugins to optimise source assets for performance.

For this job I used gulp-concat, gulp-htmlmin, gulp-imagemin, gulp-minify-css, gulp-rename, gulp-uglify, gulp with critical & gulp-rev.

Explaining how to use each of them is beyond the scope of this article but you can view my Gulpfile.js and accompanying package.json file to see what I did.

When retro-optimising a site, you might find you have to make certain compromises such as placing “src” folders inside folders you are optimising to store the original documents, then output the optimised versions into the original folder to ensure everything is backwards compatible and you haven’t broken any relative links. You should also be careful when globbing Javascript files as they may need to be loaded in a certain order to prevent race conditions. This is also true when concatenating and including Javascript libraries such as jQuery.

In an ideal world, you would not deploy any files from the repository you have compiled locally. They should be ignored by version control and compiled on the fly by running your task runner on the server using a continuous integration engine such as Jenkins or Travis CI. This is much cleaner and will prevent merge conflicts when multiple developers are working on the same codebase.

So — when we have all of the above configured and then run it over our legacy codebase, how much weight did it shave?

Good news! Now to load the site, we only need 166 HTTP (-29%) requests to download 2.2MB(-18%) of data. Slim(mer) Jim for the win!

This should mean our users with slower connections will have a much improved experience.

When we run the leaner site now deployed through Google Pagespeed Insights – we now get a much healthier score also.

This was a valuable exercise for our team and reminded us we not only have a responsibility to keep all our new and upcoming work performant but we should also address any legacy sites still currently in use wherever possible.

A leaner web is a faster web and I’m sure that’s something we can all get behind.