Jacek Nykis

on 20 July 2017

Run Django applications on the Canonical Distribution of Kubernetes

Introduction

Canonical’s IS department is responsible for running most of the company’s internal and external services. This includes infrastructure like Landscape and Launchpad, as well as third-party software such as internal and external wikis, WordPress blogs and Django sites.

One of the most important functions of our department is to help improve our products by using them in production, providing feedback and submitting patches. We are also constantly looking for ways to help our development teams run their software more easily and efficiently. Kubernetes offers self-healing, easy rollouts/rollbacks and scale-out/scale-back, so we decided to take the Canonical Distribution of Kubernetes for a spin.

Deploying the Canonical Distribution of Kubernetes

Inside Canonical, all new services are deployed with Juju and Mojo on top of our OpenStack clouds. The Canonical Distribution of Kubernetes is distributed as a Juju bundle, which made it a great starting point. We turned the bundle into a Mojo spec and added extra charms, such as the canonical-livepatch subordinate for live kernel patching and nrpe for monitoring. Once the spec was ready, we deployed it into a clean Juju 2 model with a single command:

```shell
mojo run
```

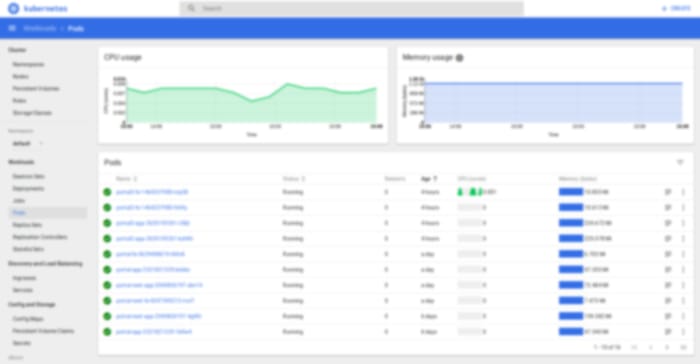

To make sure we can recover quickly from disasters, we also set up a Jenkins job that periodically deploys the entire Kubernetes stack in a Continuous Integration environment. This gives us confidence that the spec stays deployable at all times.

Once we had Kubernetes up and running, it was time to pick an application to migrate. We wanted to start with an application that we use every day so that we would discover problems quickly. We also wanted to exercise multiple Kubernetes concepts, for example CronJob resources and ExternalName services. Our choice was an internal Django site that we use to help manage customer tickets.

Application migration

The application we picked is fairly standard Django code with an Apache frontend. For this first pass we decided not to migrate the database, which let us avoid the need for stateful components in Kubernetes. The first step was to package the Django application as a Docker image. To make this possible, we had to update settings.py to read Kubernetes Secrets. For simplicity, we chose to expose the secrets as environment variables and created stanzas similar to the following:

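```python
import os

DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql_psycopg2",
        # Values come from a Kubernetes Secret exposed as environment variables.
        "NAME": os.environ["DB_NAME"],
        "USER": os.environ["DB_USERNAME"],
        "PASSWORD": os.environ["DB_PASSWORD"],
        # Must match the name of the ExternalName Service described below.
        "HOST": "database",
        "PORT": int(os.environ["DB_PORT"]),
    }
}
```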
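With plain `os.environ[...]` lookups, a Secret key that is missing from the pod surfaces as a bare `KeyError` at import time. One way to get a clearer failure is sketched below; the `require_env` and `build_databases` helpers are hypothetical, and the sketch assumes the same `DB_*` variable names and a Service named `database`:

```python
import os


def require_env(name, environ=os.environ):
    """Return a required environment variable or fail with a clear message.

    Hypothetical helper: a missing Secret key then shows up as a readable
    error in the container logs instead of a bare KeyError.
    """
    try:
        return environ[name]
    except KeyError:
        raise RuntimeError(
            f"missing environment variable {name!r}; "
            "is the Kubernetes Secret mapped into the container?"
        ) from None


def build_databases(environ=os.environ):
    """Build Django's DATABASES setting from environment variables."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql_psycopg2",
            "NAME": require_env("DB_NAME", environ),
            "USER": require_env("DB_USERNAME", environ),
            "PASSWORD": require_env("DB_PASSWORD", environ),
            # Assumed to match the ExternalName Service name ("database").
            "HOST": "database",
            "PORT": int(require_env("DB_PORT", environ)),
        }
    }
```

In settings.py the literal dictionary would then become `DATABASES = build_databases()`. Passing `environ` explicitly keeps the helper easy to test without touching the real process environment.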

Next we needed a way for the container to talk to the external database. This was easily achieved with an ExternalName Service:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: database
  namespace: default
spec:
  type: ExternalName
  externalName: external-db.example.com
```
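An ExternalName Service has no selector or proxy behind it: cluster DNS simply answers queries for the Service name with a CNAME record pointing at `externalName`. The sketch below builds the same manifest as a plain dictionary and derives the in-cluster name that resolves to the external host. The helper names are hypothetical, and it assumes the common default cluster domain `cluster.local`:

```python
import json


def external_name_service(name, external_host, namespace="default"):
    """Return an ExternalName Service manifest as a plain dict."""
    return {
        "kind": "Service",
        "apiVersion": "v1",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"type": "ExternalName", "externalName": external_host},
    }


def in_cluster_fqdn(service, cluster_domain="cluster.local"):
    """Fully qualified name that cluster DNS answers with a CNAME.

    Assumes the cluster uses the default "cluster.local" domain.
    """
    meta = service["metadata"]
    return f"{meta['name']}.{meta['namespace']}.svc.{cluster_domain}"


if __name__ == "__main__":
    svc = external_name_service("database", "external-db.example.com")
    # JSON is valid YAML, so this output could be piped to `kubectl apply -f -`.
    print(json.dumps(svc, indent=2))
    print(in_cluster_fqdn(svc), "->", svc["spec"]["externalName"])
```

Pods in the `default` namespace can use the short name `database` thanks to their DNS search path, which is why the Django settings only need that name as the database host.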

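Then we copied our uWSGI configuration into the image and started the application in emperor mode:

```dockerfile
# Emperor mode starts one uWSGI instance (vassal) per config file in the directory
CMD ["/usr/bin/uwsgi-core", "--emperor", "/path/to/config/"]
```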

The next step was to create a Dockerfile for the Apache frontend. This one was slightly trickier: we wanted to use a single image for the development, staging and production deployments, but there are small configuration differences between them. The Kubernetes documentation suggested that ConfigMaps are normally the best way to solve such problems, and sure enough, it worked! We added a new volume to each of the deployments:

```yaml
volumes:
  - name: config-volume
    configMap:
      name: config-dev
```

We then mounted it inside the container:

```yaml
volumeMounts:
  - name: config-volume
    mountPath: /etc/apache2/conf-k8s
```

Finally, we included these files in the main Apache configuration:

```apache
Include /etc/apache2/conf-k8s/*.conf
```
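When a ConfigMap is mounted as a volume, each key under `data` becomes a file of the same name in `mountPath`, so a single wildcard `Include` picks up whatever the environment's ConfigMap provides. A small sketch of that mapping follows; the key names `acl.conf`, `proxy.conf` and `notes.txt` are hypothetical examples, not the real ConfigMap contents:

```python
import fnmatch
import posixpath


def mounted_files(configmap, mount_path):
    """Map each ConfigMap data key to the file path it becomes in the pod."""
    return {
        posixpath.join(mount_path, key): content
        for key, content in configmap["data"].items()
    }


def included_by_apache(paths, pattern="/etc/apache2/conf-k8s/*.conf"):
    """Return the mounted paths matched by the Include wildcard, sorted."""
    return sorted(p for p in paths if fnmatch.fnmatchcase(p, pattern))


if __name__ == "__main__":
    config_dev = {
        "metadata": {"name": "config-dev"},
        "data": {
            "acl.conf": "# ACL rules for development\n",
            "proxy.conf": "ProxyPass / uwsgi://app-dev:32001/\n",
            "notes.txt": "not picked up by Apache\n",
        },
    }
    files = mounted_files(config_dev, "/etc/apache2/conf-k8s")
    print(included_by_apache(files))
```

A side effect of the `*.conf` pattern is that any non-`.conf` keys in the ConfigMap are mounted but ignored by Apache.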

The ConfigMap contains ACLs and also ProxyPass rules appropriate for the deployment. For example, in development we point at the “app-dev” backend like this:

```apache
ProxyPass / uwsgi://app-dev:32001/
ProxyPassReverse / uwsgi://app-dev:32001/
```
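Since only the ConfigMap differs between environments, its ProxyPass rules could be generated from a small table. This is a sketch under assumptions: only `config-dev`, `app-dev` and port 32001 come from the setup above, while the staging and production names and ports are hypothetical, and the ACL rules are left out:

```python
import json

# Hypothetical per-environment backends; only "app-dev" on port 32001
# is taken from the development setup described above.
BACKENDS = {
    "dev": ("app-dev", 32001),
    "staging": ("app-staging", 32001),
    "production": ("app-production", 32001),
}


def proxy_rules(host, port):
    """Apache ProxyPass/ProxyPassReverse lines for a uWSGI backend."""
    url = f"uwsgi://{host}:{port}/"
    return f"ProxyPass / {url}\nProxyPassReverse / {url}\n"


def apache_configmap(env):
    """ConfigMap named config-<env> holding that environment's proxy rules."""
    host, port = BACKENDS[env]
    return {
        "kind": "ConfigMap",
        "apiVersion": "v1",
        "metadata": {"name": f"config-{env}"},
        "data": {"proxy.conf": proxy_rules(host, port)},
    }


if __name__ == "__main__":
    for env in BACKENDS:
        print(json.dumps(apache_configmap(env), indent=2))
```

Each deployment's `configMap.name` would then point at its own `config-<env>` object, while the Apache image stays identical across environments.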

With all of the above changes completed, we had the development environment running successfully on Kubernetes!

Further improvements

Of course, we wanted to make code updates easier, so we did not stop there. We decided to use Jenkins for Continuous Integration and created two jobs. The first one takes a branch as an argument and runs the tests; if they pass, it builds a Docker image and deploys it to our development environment. This lets us quickly verify changes in an environment that is set up in exactly the same way as production. Once developers are happy with their changes, they submit a merge proposal in Launchpad, which is reviewed and merged as normal.

This is where the second Jenkins job comes in: it starts automatically when trunk changes, runs the tests, builds a Docker image and deploys it to our staging environment. Due to the nature of the application, we still want a final human sign-off before we push to production, but once that is done, going live is a quick push-button process.
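The flow of the two jobs and the manual production step can be summarised in a short sketch. The step functions (`run_tests`, `build_image`, `deploy`) are hypothetical stand-ins for the real Jenkins build steps, which are not shown here:

```python
from typing import Callable


def ci_job(revision: str, target_env: str,
           run_tests: Callable[[str], bool],
           build_image: Callable[[str], str],
           deploy: Callable[[str, str], None]) -> str:
    """Test a revision, build an image and deploy it to one environment.

    Raises if the tests fail, so nothing is built or deployed from a
    broken revision. Returns the built image name on success.
    """
    if not run_tests(revision):
        raise RuntimeError(f"tests failed for {revision}; not deploying")
    image = build_image(revision)
    deploy(image, target_env)
    return image


def branch_job(branch, **steps):
    # Job 1: started with a branch argument, deploys to development.
    return ci_job(branch, "development", **steps)


def trunk_job(**steps):
    # Job 2: triggered by a trunk change, deploys to staging.
    return ci_job("trunk", "staging", **steps)


def promote_to_production(image, approved_by, deploy):
    # Production reuses the staging image but needs a human sign-off first.
    if not approved_by:
        raise PermissionError("production deploys need a human sign-off")
    deploy(image, "production")
```

Promoting the exact image that was tested in staging, rather than rebuilding it, is one way to keep staging and production identical; whether the real jobs do this is not stated above.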

What’s next?

We are investigating Kubernetes liveness probes to see whether they can improve failure detection for the Django container. We also want to become more familiar with Kubernetes concepts and operations, because we would like to offer it to internal development teams and migrate more of the applications our department runs to Kubernetes.
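As a starting point for that investigation, the sketch below builds a `livenessProbe` stanza and estimates how long a hung container could go unnoticed. It assumes a `tcpSocket` probe on port 32001, treated here as the container's uWSGI port; because the ProxyPass rules use `uwsgi://`, that port speaks the uwsgi protocol rather than HTTP, so an `httpGet` probe against it would not work. The defaults mirror Kubernetes' documented defaults (`periodSeconds` 10, `timeoutSeconds` 1, `failureThreshold` 3, `initialDelaySeconds` 0):

```python
def liveness_probe(port, period_seconds=10, failure_threshold=3,
                   initial_delay_seconds=0, timeout_seconds=1):
    """A tcpSocket livenessProbe stanza for a container spec."""
    return {
        "livenessProbe": {
            "tcpSocket": {"port": port},
            "initialDelaySeconds": initial_delay_seconds,
            "periodSeconds": period_seconds,
            "timeoutSeconds": timeout_seconds,
            "failureThreshold": failure_threshold,
        }
    }


def worst_case_detection_seconds(probe):
    """Rough upper bound between a hang and the kubelet restarting the container.

    failureThreshold consecutive probes must fail; they are periodSeconds
    apart and the last one may take up to timeoutSeconds. Jitter is ignored.
    """
    p = probe["livenessProbe"]
    return p["failureThreshold"] * p["periodSeconds"] + p["timeoutSeconds"]


if __name__ == "__main__":
    print(worst_case_detection_seconds(liveness_probe(32001)))
```

A TCP check only proves that the socket accepts connections; a deadlocked application could still pass it, which is why an application-level health check may be worth evaluating as well.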

Was it worth it?

Absolutely! Some of the biggest wins we captured:

- We provided lots of feedback to the Kubernetes charm developers which helped them make improvements

- We submitted multiple charm patches, including Juju `actions`, sharing our operational experience with the community

- We are in a very good position to start offering Kubernetes to Canonical's internal development teams

- We gained experience in migrating an application to Kubernetes, which we will build on as we move more services over