Miona Aleksic

on 12 June 2023

Minimising latency in your edge cloud with real-time kernel

Written by Juan Pablo Noreña

From telecommunications to edge cloud and industrial digital twins, experimenting with real-time capabilities in cloud technologies is an industry trend. Applications for the edge often have an additional requirement because they interact with real-time systems: they need to run deterministically. This means that time constrains their execution and their interaction with the rest of the system.

Canonical’s MicroClouds are small-scale cloud deployments that host scalable and highly available applications, providing compute, secure networking, and resilient storage closer to the data sources. They are especially suitable for use cases where workloads need to be served close to where they are consumed, as with edge cloud deployments. Coupled with the real-time kernel feature in Ubuntu, MicroClouds are a great solution for applications at the edge with stringent low-latency requirements.

This blog shows how to enable the real-time kernel on MicroCloud hosts. It also covers our real-time testing, which compares the performance of virtualised and containerised applications running on the real-time kernel against the generic Ubuntu kernel.

Deploy your MicroCloud

If you’re not yet familiar with MicroCloud, it is easy to get started. All you need is to install the necessary components with:

sudo snap install lxd microcloud microceph microovn

and follow the initialisation process as outlined.

Enable real-time nodes

Once your MicroCloud is up, its cluster can look like this:

ubuntu@ob76-node0:~$ lxc cluster list

+-----------------------+---------------------------+------------------+--------------+----------------+-------------+--------+-------------------+
|         NAME          |            URL            |      ROLES       | ARCHITECTURE | FAILURE DOMAIN | DESCRIPTION | STATE  |      MESSAGE      |
+-----------------------+---------------------------+------------------+--------------+----------------+-------------+--------+-------------------+
| ob76-node2.microcloud | https://172.27.77.19:8443 | database-leader  | x86_64       | default        |             | ONLINE | Fully operational |
|                       |                           | database         |              |                |             |        |                   |
+-----------------------+---------------------------+------------------+--------------+----------------+-------------+--------+-------------------+
| ob76-node3.microcloud | https://172.27.77.12:8443 | database-standby | x86_64       | default        |             | ONLINE | Fully operational |
+-----------------------+---------------------------+------------------+--------------+----------------+-------------+--------+-------------------+
| ob76-node5.microcloud | https://172.27.77.20:8443 | database         | x86_64       | default        |             | ONLINE | Fully operational |
+-----------------------+---------------------------+------------------+--------------+----------------+-------------+--------+-------------------+
| ob76-node6.microcloud | https://172.27.77.11:8443 | database         | x86_64       | default        |             | ONLINE | Fully operational |
+-----------------------+---------------------------+------------------+--------------+----------------+-------------+--------+-------------------+

You can then attach an Ubuntu Pro subscription to the hosts and enable the real-time kernel feature. In this blog, we enable one of the nodes, ob76-node6, as a real-time node:

ubuntu@ob76-node6:~$ sudo pro attach <your_pro_token>

ubuntu@ob76-node6:~$ sudo pro enable realtime-kernel

Then, edit the line beginning with “GRUB_CMDLINE_LINUX_DEFAULT” in /etc/default/grub and add the following set of parameters to tune the real-time host:

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash skew_tick=1 isolcpus=managed_irq,domain,2-3 intel_pstate=disable nosoftlockup tsc=nowatchdog nohz=on nohz_full=2-3 rcu_nocbs=2-3"
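If you prefer to script this edit rather than open the file by hand, the following is a minimal sketch. The helper name set_grub_cmdline is hypothetical (it is not part of GRUB or Ubuntu Pro), and it assumes the parameter string contains no "|" or "&" characters, which holds for the parameters used in this blog.

```shell
#!/bin/sh
# set_grub_cmdline FILE PARAMS
# Hypothetical helper: rewrites the GRUB_CMDLINE_LINUX_DEFAULT line of FILE
# so that it holds PARAMS, and keeps the previous version as FILE.bak.
# Assumption: PARAMS contains no "|" or "&" (both are special to sed here).
set_grub_cmdline() {
    file=$1
    params=$2
    # Back up first; the backup is also the input for the rewrite.
    cp "$file" "$file.bak" || return 1
    # Replace only the line that starts with the variable name.
    sed "s|^GRUB_CMDLINE_LINUX_DEFAULT=.*|GRUB_CMDLINE_LINUX_DEFAULT=\"$params\"|" \
        "$file.bak" > "$file"
}

# Example, to be run as root on the real-time node:
# set_grub_cmdline /etc/default/grub "quiet splash skew_tick=1 isolcpus=managed_irq,domain,2-3 intel_pstate=disable nosoftlockup tsc=nowatchdog nohz=on nohz_full=2-3 rcu_nocbs=2-3"
```

The sketch only touches the one line and leaves the rest of the file as it was, so the backup can be compared with diff before the change is applied.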

Finally, regenerate the GRUB configuration with sudo update-grub and reboot the node so that the real-time kernel and its parameters take effect. After the reboot, verify them:

ubuntu@ob76-node6:~$ uname -a

Linux ob76-node6 5.15.0-1034-realtime #37-Ubuntu SMP PREEMPT_RT Wed Mar 1 20:50:08 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

ubuntu@ob76-node6:~$ cat /proc/cmdline

BOOT_IMAGE=/boot/vmlinuz-5.15.0-1034-realtime root=UUID=449c88ff-8e2e-470e-9f04-4f758114573f ro quiet splash skew_tick=1 isolcpus=managed_irq,domain,2-3 intel_pstate=disable nosoftlockup tsc=nowatchdog nohz=on nohz_full=2-3 rcu_nocbs=2-3 vt.handoff=7
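Comparing the command line by eye is error-prone, so the check can be sketched as a small shell function. check_rt_cmdline is a hypothetical helper written for this post; it only looks for the parameters listed above and takes the isolated core range as its second argument.

```shell
#!/bin/sh
# check_rt_cmdline CMDLINE CORES
# Hypothetical helper: prints every tuning parameter from this blog that is
# missing from CMDLINE and returns non-zero if at least one is absent.
check_rt_cmdline() {
    cmdline=$1
    cores=$2
    missing=0
    for param in skew_tick=1 "isolcpus=managed_irq,domain,$cores" \
                 intel_pstate=disable nosoftlockup tsc=nowatchdog \
                 nohz=on "nohz_full=$cores" "rcu_nocbs=$cores"; do
        # Pad with spaces so that only whole parameters match.
        case " $cmdline " in
            *" $param "*) ;;
            *) echo "missing: $param"; missing=1 ;;
        esac
    done
    return "$missing"
}

# Example on the real-time node, where cores 2-3 were isolated:
# check_rt_cmdline "$(cat /proc/cmdline)" 2-3 && echo "all parameters present"
```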

Launch a real-time system container

System containers share the host kernel, so no additional kernel configuration is required inside the container. You can launch a system container, pin it to one or more of the isolated cores, and start running your real-time application.

ubuntu@ob76-node0:~$ lxc launch ubuntu:22.04 c-rt -c limits.cpu=2 -c security.privileged=true \
-c limits.kernel.rtprio=99 --target ob76-node6.microcloud
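A note on pinning: in LXD, a plain number for limits.cpu exposes that many CPUs and lets LXD balance the container across them, while a range such as 2-3 pins the container to those specific cores. The sketch below is one way to confirm which cores a process may actually run on. allowed_cpus is a hypothetical helper, and the lxc commands in the comments are an assumed follow-up rather than part of the original setup.

```shell
#!/bin/sh
# allowed_cpus [STATUS_FILE]
# Hypothetical helper: prints the CPU list that the kernel allows for a
# process, read from the Cpus_allowed_list field of a /proc status file
# (defaults to the calling process).
allowed_cpus() {
    status_file=${1:-/proc/self/status}
    awk '/^Cpus_allowed_list:/ { print $2 }' "$status_file"
}

# Assumed usage, pinning c-rt to the isolated cores 2-3 and checking it:
#   lxc config set c-rt limits.cpu 2-3
#   lxc exec c-rt -- grep Cpus_allowed_list /proc/self/status
```

If the reported list matches the cores passed to isolcpus on the host, the container's tasks stay on the isolated cores.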

Launch a real-time virtual machine

Virtual machines run their own kernel, so you can attach the host's Ubuntu Pro subscription to the guest as well and enable the real-time kernel inside the virtual machine:

ubuntu@ob76-node0:~$ lxc launch ubuntu:22.04 vm-rt --vm -c limits.cpu=2 \
-c limits.kernel.rtprio=99 --target ob76-node6.microcloud

ubuntu@ob76-node0:~$ lxc exec vm-rt -- pro attach <your_pro_token>

ubuntu@ob76-node0:~$ lxc exec vm-rt -- pro enable realtime-kernel

Again, edit /etc/default/grub, this time inside the virtual machine, and modify the line beginning with “GRUB_CMDLINE_LINUX_DEFAULT”:

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash skew_tick=1 isolcpus=managed_irq,domain,0 intel_pstate=disable nosoftlockup tsc=nowatchdog nohz=on nohz_full=0 rcu_nocbs=0"

Regenerate the GRUB configuration with sudo update-grub and reboot the virtual machine.

Testing and results

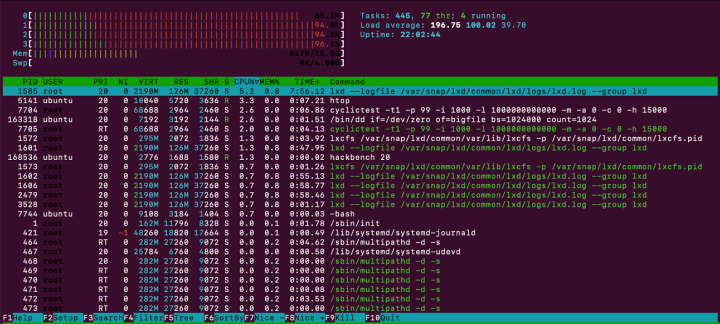

We used cyclictest from the rt-tests suite to measure the latency experienced by workloads inside a system container and a virtual machine. Alongside the instances, we simulated load on the host as a worst-case latency test scenario, so that the results reflect stressful environments.

Generate heavy CPU load on the host node under test using hackbench, which ships with rt-tests:

ubuntu@ob76-node6:~$ sudo apt update -y && sudo apt install rt-tests -y

ubuntu@ob76-node6:~$ while true; do /bin/dd if=/dev/zero of=bigfile bs=1024000 count=1024; done & \
while true; do /usr/bin/killall hackbench; sleep 5; done & \
while true; do hackbench 20; done

Testing Instances

Get a console to the real-time virtual machine and install rt-tests:

ubuntu@ob76-node0:~$ lxc exec vm-rt -- /bin/bash

root@vm-rt:~# apt update -y && apt install rt-tests -y

Finally, run a cyclictest:

root@vm-rt:~# cyclictest -t1 -p 99 -i 1000 -l 1000000000000 -m -a0 -c1 -h 15000 > \
rt-test.output

The same steps apply to the real-time container:

ubuntu@ob76-node0:~$ lxc exec c-rt -- /bin/bash

root@c-rt:~# apt update -y && apt install rt-tests -y

root@c-rt:~# cyclictest -t1 -p 99 -i 1000 -l 1000000000000 -m -a0 -c1 -h 15000 > \
rt-test.output
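Once a run has finished, the minimum, average and maximum latency can be pulled out of rt-test.output. The sketch below assumes the summary lines that cyclictest appends after the histogram when it is run with -h (for example "# Max Latencies: 00021") and a single measurement thread, as in the commands above. summarise_latency is a hypothetical helper name.

```shell
#!/bin/sh
# summarise_latency FILE
# Hypothetical helper: extracts the min/avg/max latency in microseconds from
# a cyclictest histogram output file.
# Assumption: the file ends with "# Min Latencies:", "# Avg Latencies:" and
# "# Max Latencies:" lines holding one value each (one thread, -t1).
summarise_latency() {
    awk '
        /^# Min Latencies:/ { min = $4 + 0 }   # "+ 0" drops the zero padding
        /^# Avg Latencies:/ { avg = $4 + 0 }
        /^# Max Latencies:/ { max = $4 + 0 }
        END { printf "min=%d us avg=%d us max=%d us\n", min, avg, max }
    ' "$1"
}

# Example, inside the instance after the test has ended:
# summarise_latency rt-test.output
```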

Performance comparison

We performed the tests in a MicroCloud built on Intel(R) NUCs.

| Processor | Intel(R) Core(TM) i5-5300U CPU @ 2.30GHz |
| OS | Ubuntu 22.04.2 Jammy |
| Generic (gen) kernel | Linux 5.15.0-69-generic #76-Ubuntu SMP |
| Real-time (rt) kernel | Linux 5.15.0-1034-realtime #37-Ubuntu SMP PREEMPT_RT |

The following table compares the latency measured on bare metal, in system containers and in virtual machines, in generic and real-time environments, under the stress conditions simulated using hackbench:

| Kernel | Instance type | Min latency | Avg latency | Max latency (worst-case scenario) |
| Host w/ gen kernel | bare-metal | 3 μs | 12 μs | 1496 μs |
| Host w/ gen kernel | container | 3 μs | 20 μs | 2118 μs |
| Host w/ gen kernel – Guest w/ gen kernel | virtual machine | 4 μs | 105 μs | 13579 μs |
| Host w/ rt kernel | bare-metal | 3 μs | 4 μs | 17 μs |
| Host w/ rt kernel | container | 3 μs | 7 μs | 21 μs |
| Host w/ rt kernel – Guest w/ gen kernel | virtual machine | 4 μs | 15 μs | 4472 μs |
| Host w/ rt kernel – Guest w/ rt kernel | virtual machine | 4 μs | 13 μs | 2282 μs |
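To put the worst-case figures in perspective, the ratio between the generic and real-time maximum latency can be worked out directly from the table. The short sketch below does this with awk. improvement is a hypothetical helper, and the values are the ones reported above, rounded to the nearest integer.

```shell
#!/bin/sh
# improvement GEN_MAX RT_MAX
# Hypothetical helper: prints how many times lower the real-time worst-case
# latency is than the generic one, rounded to the nearest integer.
improvement() {
    awk -v gen="$1" -v rt="$2" 'BEGIN { printf "%.0f\n", gen / rt }'
}

improvement 1496 17      # bare metal: generic vs real-time host kernel
improvement 2118 21      # container: generic vs real-time host kernel
improvement 13579 2282   # virtual machine: generic vs real-time on host and guest
```

With these inputs the worst-case latency drops by a factor of roughly 88 on bare metal, 101 in the container and 6 in the virtual machine.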

Conclusion

Real-time applications in cloud technologies can sound like an oxymoron. However, when Canonical MicroClouds meet the real-time kernel feature in Ubuntu, it is possible to get deterministic behaviour in virtualised workloads.

In other words, this allows the cloud in question to accurately deliver on its latency requirements, even if the underlying host is under stress, making it an effective solution for edge deployments with strict latency expectations.

MicroCloud offers two ways of hosting workloads, system containers and virtual machines, each with different strengths depending on the use case. For real-time applications, system containers on a host with the real-time kernel enabled showed the lowest average and lowest maximum latency of the two, making them the recommended choice for your workload. However, running a privileged container needs to be considered carefully. It requires additional security precautions, such as ensuring that you run only trusted workloads or that no untrusted task runs as root in the container.

As the benchmark shows, MicroClouds coupled with the real-time kernel can support critical applications in industries with real-time constraints, while keeping all the essential features the industry seeks in cloud technologies.

This whitepaper is a good starting point if you’d like to learn more about MicroClouds in edge cloud environments.

If you’re curious about the real-time kernel, this blog series or the webinar introducing real-time Linux is for you.