Fouaz Bouguerra

on 17 December 2021

Data centre networking: SmartNICs

This blog post is part of our data centre networking series:

- Data centre networking: What is SDN

- Data centre networking: SDN fundamentals

- Data centre networking: SDDC

- Data centre networking: What is OVS

- Data centre networking: What is OVN

- Data centre networking: SmartNICs

With the explosion of application traffic and the multiplication of data centre workloads over the last decade, east-west traffic (traffic between servers inside the data centre) has grown dramatically and strained traditional architectures built around north-south traffic. This has forced a rethink of the entire data centre architecture, with the goal of still meeting performance, security, and monitoring requirements.

From a performance and security perspective, rather than shunting the traffic to centralised security and management blocks within the data centre (which might cause performance issues) or accepting uncontrolled zones, the forwarding and security intelligence can be distributed throughout the data centre.

Innovations like DPDK already provided an efficient way for CPUs to run network and security functions and improve their performance. An even better approach is to have server hosts equipped with a new generation of processors close to the workloads and capable of offloading key functions such as traffic forwarding, firewalling, deep packet inspection, encryption/decryption, and monitoring. These processors are known by different names depending on the chip manufacturer. They are typically called SmartNICs, Distributed Services Cards (DSCs), or Data Processing Units (DPUs). The leading chip manufacturers who are active in this space are NVIDIA, Broadcom, Marvell, Intel, Netronome, and Pensando. They have been transforming traditional NICs into programmable, more powerful and flexible platforms that offload and accelerate core networking, storage, and security functions.

SmartNICs run directly on the server host and can offload network and security functions, allowing the CPU to dedicate more of its cores to application processing. Without getting into a comparison of SmartNICs and DPUs, let’s try to understand what a SmartNIC is.

What is a SmartNIC?

A SmartNIC is an intelligent network interface card that is a data processing unit in itself. It operates like a server inside a server: the NIC can communicate directly with the VM without requiring interrupt handling in the kernel. With a conventional NIC, incoming packets pass through an abstraction layer in the kernel, which handles the interrupts and performs context switches to pull each packet from the ring buffer and then deliver it to the VMs.
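
The ring buffer mentioned here is a fixed-size circular queue of packet slots shared between the NIC and its driver. The Python sketch below is a simplified, single-threaded model of such a ring, assuming one producer (the NIC writing received packets) and one consumer (the driver pulling them). Real drivers use DMA-mapped descriptors and hardware head/tail registers, which this model does not attempt to reproduce.

```python
from typing import List, Optional


class PacketRing:
    """Simplified model of a NIC receive ring (single producer, single consumer).

    One slot is always left empty so that head == tail unambiguously
    means "empty" and (head + 1) % size == tail means "full".
    """

    def __init__(self, size: int) -> None:
        if size < 2:
            raise ValueError("ring needs at least 2 slots")
        self.size = size
        self.slots: List[Optional[bytes]] = [None] * size
        self.head = 0  # next slot the producer (NIC) writes
        self.tail = 0  # next slot the consumer (driver) reads

    def is_empty(self) -> bool:
        return self.head == self.tail

    def is_full(self) -> bool:
        return (self.head + 1) % self.size == self.tail

    def produce(self, packet: bytes) -> bool:
        """NIC side: store a packet; returns False (packet dropped) if full."""
        if self.is_full():
            return False
        self.slots[self.head] = packet
        self.head = (self.head + 1) % self.size
        return True

    def consume(self) -> Optional[bytes]:
        """Driver side: pull the oldest packet, or None if the ring is empty."""
        if self.is_empty():
            return None
        packet = self.slots[self.tail]
        self.slots[self.tail] = None
        self.tail = (self.tail + 1) % self.size
        return packet


if __name__ == "__main__":
    ring = PacketRing(4)  # 4 slots give a capacity of 3 packets
    for i in range(5):
        print(i, ring.produce(bytes([i])))  # the last two are dropped
    print(ring.consume(), ring.consume())
```

In the conventional path, the host kernel is involved every time packets are pulled from a ring like this one, which is the per-packet work that SmartNIC offload aims to keep off the host CPU.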

When several hundred VMs run on a server with a conventional NIC, each VM uses a NIC emulated by the host machine, and the kernel must handle interrupts on their behalf. This consumes significant power and CPU cycles, and traffic forwarding performance suffers even when the NIC throughput is increased.
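
To see why per-packet kernel work becomes a bottleneck as NIC throughput grows, it helps to compute how much time the host has for each packet at line rate. The Python sketch below assumes minimum-size 64-byte Ethernet frames plus the 20 bytes of preamble, start-of-frame delimiter and inter-frame gap that each frame also occupies on the wire; the link speeds are illustrative.

```python
# Per-frame wire overhead in bytes: 7-byte preamble + 1-byte start-of-frame
# delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second on a link for a given Ethernet frame size."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


def per_packet_budget_ns(link_bps: float, frame_bytes: int) -> float:
    """Time available to process each frame at line rate, in nanoseconds."""
    return 1e9 / packets_per_second(link_bps, frame_bytes)


if __name__ == "__main__":
    for gbps in (10, 25, 100):
        link = gbps * 1e9
        pps = packets_per_second(link, 64)
        print(f"{gbps:>3} Gbit/s: {pps / 1e6:6.2f} Mpps, "
              f"{per_packet_budget_ns(link, 64):5.2f} ns per packet")
```

At 10 Gbit/s this works out to roughly 14.88 million packets per second, or about 67 ns per packet, and at 100 Gbit/s to about 6.7 ns per packet: a budget that leaves very little room for interrupt handling and context switches in the host kernel.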

SmartNICs/DPUs are more flexible than traditional, fixed-function ASICs. Organisations can also reuse the host processing power freed up by offloading for other functions in the data centre. And because SmartNICs run inside the servers, organisations can deploy virtualized network and security functions instead of purpose-built hardware.

SmartNIC benefits

SmartNICs are the state-of-the-art solution for network and storage virtualization in data centre and cloud environments. Leading cloud providers already use custom SmartNIC designs, such as AWS Nitro and Azure SmartNIC. SmartNICs provide isolation, security and increased performance, which translate into greater energy and cost efficiency.

Recently, a new set of commodity SmartNIC products has become available, such as NVIDIA BlueField, Broadcom Stingray, and Pensando DSC.

SmartNICs distribute intelligence throughout the data centre and provide several benefits as well:

- Provide security controls that are customisable and close to the workload. This is achieved through rules tailored to local workloads, rather than configuring a large number of security rules across a set of separate firewalls to cover the requirements of hundreds of applications.

- Performance improvement by offloading security and network functions to specialised accelerators, freeing the CPU to focus on serving applications.

- Improved power efficiency: the ARM and FPGA computing resources typically found in SmartNICs have been shown to be more power-efficient than general-purpose host CPUs for these networking and security tasks.

- Reduced complexity, as management and security functions can run locally. In addition, SmartNICs can be managed centrally, so even though intelligence is distributed throughout the data centre, IT teams retain global visibility and control over it.

- They provide a broad set of storage and network virtualization options:

  - Overlay networks, e.g. VXLAN

  - Embedded switch

  - NVMe emulation

  - Virtio-queue support (block and network)

  - Encryption/decryption

  - Packet filters / deep packet inspection
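
As an illustration of the overlay networking that SmartNICs can offload, the sketch below builds and parses the 8-byte VXLAN header defined in RFC 7348: a flags byte with the I bit (0x08) set to mark a valid VNI, 24 reserved bits, the 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits. It only covers the VXLAN header itself; the outer Ethernet, IP and UDP (destination port 4789) headers that a NIC would also add during encapsulation are omitted.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag from RFC 7348
VXLAN_HEADER_LEN = 8


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, 24 reserved bits set to zero.
    # Second 32-bit word: VNI in the top 24 bits, 8 reserved bits set to zero.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vxlan_header(data: bytes) -> int:
    """Extract the VNI from a VXLAN header after checking the I flag."""
    if len(data) < VXLAN_HEADER_LEN:
        raise ValueError("truncated VXLAN header")
    word0, word1 = struct.unpack("!II", data[:VXLAN_HEADER_LEN])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag not set")
    return word1 >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header to an inner Ethernet frame (outer headers omitted)."""
    return build_vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    header = build_vxlan_header(5001)
    print(header.hex())                # 0800000000138900
    print(parse_vxlan_header(header))  # 5001
```

The VNI is what lets many isolated tenant networks share the same physical fabric; doing this per-packet header work on the NIC is one of the offloads listed above.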
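
The first benefit listed, security rules tailored to local workloads, can be illustrated with a small stateless filter. In this Python sketch the rule format, the workload names and the default-deny policy are all hypothetical: each host (or its SmartNIC) installs only the rules whose protected workload runs locally, instead of the full rule set of a centralised firewall, and evaluates them first-match.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Rule:
    """Hypothetical per-workload rule; None in a field means "match anything"."""
    workload: str            # local workload the rule protects
    src: IPv4Network         # source prefix the rule applies to
    protocol: Optional[str]  # e.g. "tcp" or "udp"
    dst_port: Optional[int]
    action: str              # "allow" or "deny"


@dataclass(frozen=True)
class Packet:
    dst_workload: str
    src: IPv4Address
    protocol: str
    dst_port: int


def local_rules(all_rules: Iterable[Rule], local_workloads: Set[str]) -> List[Rule]:
    """Keep only the rules that protect workloads running on this host."""
    return [r for r in all_rules if r.workload in local_workloads]


def decide(rules: List[Rule], pkt: Packet) -> str:
    """Return the action of the first matching rule; unmatched traffic is denied."""
    for r in rules:
        if (r.workload == pkt.dst_workload
                and pkt.src in r.src
                and (r.protocol is None or r.protocol == pkt.protocol)
                and (r.dst_port is None or r.dst_port == pkt.dst_port)):
            return r.action
    return "deny"


if __name__ == "__main__":
    rules = [
        Rule("web-1", IPv4Network("0.0.0.0/0"), "tcp", 443, "allow"),
        Rule("db-1", IPv4Network("10.0.1.0/24"), "tcp", 5432, "allow"),
        Rule("billing-7", IPv4Network("10.0.9.0/24"), None, None, "allow"),
    ]
    # This host only runs web-1 and db-1, so billing-7's rule is not installed.
    host_rules = local_rules(rules, {"web-1", "db-1"})
    print(len(host_rules))
    print(decide(host_rules, Packet("db-1", IPv4Address("10.0.1.5"), "tcp", 5432)))
    print(decide(host_rules, Packet("db-1", IPv4Address("192.0.2.1"), "tcp", 5432)))
```

The point of the design is the `local_rules` step: each enforcement point evaluates a short list relevant to its own workloads rather than every rule in the data centre.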

OpenStack and OVN on SmartNIC DPUs

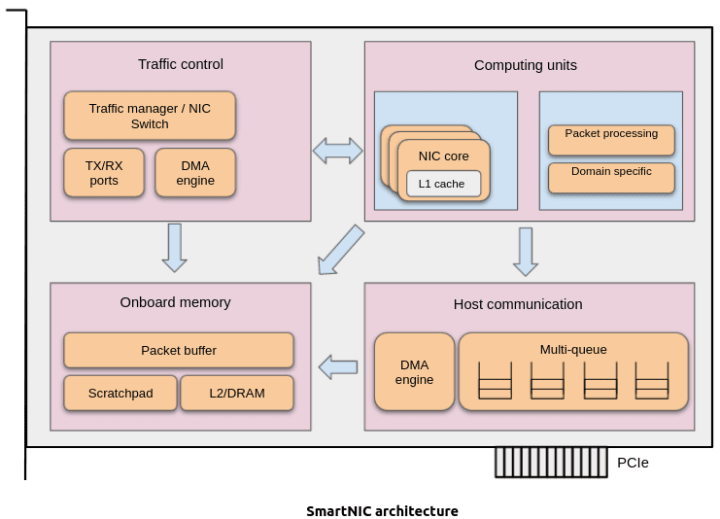

A DPU consists of a high-performance network controller capable of hardware acceleration of virtualised workloads and a multi-core CPU which can run a generic operating system and control plane applications.

The DPU network controller is presented to the hypervisor operating system as a set of physical functions (PFs) and virtual functions (VFs) which can be used for control plane communication and to provide hardware-accelerated virtual network controllers to workloads. The DPU NIC also contains an embedded switch (NIC switch) which can be programmed by the general-purpose CPU of the DPU.
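
On Linux, a PF that supports SR-IOV reports how many VFs it can expose, and how many are currently enabled, through the `sriov_totalvfs` and `sriov_numvfs` sysfs attributes of its PCI device. The Python sketch below reads those two attributes for a network interface on the hypervisor host. The interface name is a hypothetical placeholder, and the `sysfs_root` parameter exists only so the function can be exercised against a fake directory tree; whether a given DPU exposes its functions this way depends on the device and its configuration.

```python
import tempfile
from pathlib import Path
from typing import Optional, Tuple


def sriov_vf_counts(iface: str, sysfs_root: str = "/sys/class/net") -> Optional[Tuple[int, int]]:
    """Return (enabled VFs, maximum VFs) for a PF, or None if SR-IOV is not exposed."""
    device_dir = Path(sysfs_root) / iface / "device"
    total_path = device_dir / "sriov_totalvfs"
    num_path = device_dir / "sriov_numvfs"
    if not total_path.exists() or not num_path.exists():
        return None  # not a PF, or the driver does not expose SR-IOV
    total = int(total_path.read_text().strip())
    enabled = int(num_path.read_text().strip())
    return enabled, total


if __name__ == "__main__":
    # Demonstrate against a fake sysfs tree; on a real host, call
    # sriov_vf_counts() with a real PF name and the default sysfs_root.
    with tempfile.TemporaryDirectory() as root:
        dev = Path(root) / "enp3s0f0" / "device"  # hypothetical interface name
        dev.mkdir(parents=True)
        (dev / "sriov_totalvfs").write_text("16\n")
        (dev / "sriov_numvfs").write_text("4\n")
        print(sriov_vf_counts("enp3s0f0", sysfs_root=root))  # (4, 16)
```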

The DPU’s CPU can program the PFs and VFs exposed to the hypervisor host by means of port representors, which can be plugged into the virtual switches offloaded to the NIC. However, unlike in the non-DPU scenario, the representors are programmed on a different host from the hypervisor host. Off-path SmartNIC DPUs therefore introduce an architecture change: the network agents responsible for NIC switch configuration and representor interface plugging run on a separate SoC with its own CPU and memory, which runs a separate OS kernel.

As a result, there are additional control plane mechanisms to put in place in order to orchestrate accelerated port allocation between the DPU and the hypervisor.
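
The coordination described here can be sketched as a lookup performed on the DPU side. Because the hypervisor and the DPU run separate operating systems, this model assumes that a PCI address chosen on the hypervisor is not directly meaningful on the DPU, so the hypervisor side describes the VF it allocated as the MAC address of the PF plus the VF number. The DPU-side agent maps that pair to the name of the port representor to plug into the offloaded virtual switch. All class names, field names, MAC addresses and representor names below are hypothetical and are not the OVN, OpenStack or libvirt interfaces.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class PortRequest:
    """Hypothetical message sent by the hypervisor side after allocating a VF."""
    port_id: str  # logical port identifier known to both sides
    pf_mac: str   # MAC address of the PF the VF belongs to
    vf_num: int   # VF index on that PF


@dataclass
class RepresentorRegistry:
    """Hypothetical DPU-side map from (PF MAC, VF number) to representor name."""
    by_vf: Dict[Tuple[str, int], str] = field(default_factory=dict)
    plugged: Dict[str, str] = field(default_factory=dict)  # port_id -> representor

    def register(self, pf_mac: str, vf_num: int, representor: str) -> None:
        self.by_vf[(pf_mac.lower(), vf_num)] = representor

    def plug(self, req: PortRequest) -> str:
        """Resolve the representor for a request and record it as plugged.

        A real agent would then attach the representor to the offloaded
        virtual switch; that step is outside the scope of this sketch.
        """
        key = (req.pf_mac.lower(), req.vf_num)
        if key not in self.by_vf:
            raise LookupError(f"no representor for PF {req.pf_mac} VF {req.vf_num}")
        representor = self.by_vf[key]
        self.plugged[req.port_id] = representor
        return representor

    def unplug(self, port_id: str) -> None:
        self.plugged.pop(port_id, None)


if __name__ == "__main__":
    registry = RepresentorRegistry()
    registry.register("02:00:00:00:00:01", 0, "pf0vf0")
    registry.register("02:00:00:00:00:01", 1, "pf0vf1")
    print(registry.plug(PortRequest("vm-a-port", "02:00:00:00:00:01", 1)))  # pf0vf1
```

In this model, keying on the PF MAC and VF number gives both sides an identifier that does not depend on either operating system's device enumeration.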

Canonical has been working to introduce the necessary changes into the upstream OVN, OpenStack and Libvirt projects so that DPUs can be used seamlessly, with these control plane challenges addressed.

The NIC switch is also not exposed to the hypervisor host; therefore, it cannot be programmed from the hypervisor side.

This calls for additional logic to be present at the SmartNIC DPU host side, which would be more reusable if made independent of OpenStack.

Canonical has been working closely with NVIDIA for many years to fuel innovation and support open source software with the power of accelerated processing. This collaboration has already allowed us to jointly deliver GPU acceleration into Linux, OpenStack, and container workloads on traditional data centre servers. In the SmartNIC space, we’ve also been working with NVIDIA on hot topics such as enabling Ubuntu on BlueField DPUs and onboarding those DPUs, and OVS/OVN offload.